There is an apocryphal tale I've heard many times about how, in Ancient Rome (or, in some tellings, Greece), the engineers responsible for the construction of an arch were required to stand underneath it as the final wooden supports were taken out. In some versions of the story, not only the engineer but also the engineer's family had to stand underneath it. In the case of the Veresk bridge in Iran, some believe that the Shah forced the chief engineer to stand under the bridge with his family as the first train went over it. Whether or not any of these things actually happened, the stories tell us something about the cultural perspective on the obligations that engineers have to society as a whole.

In the 1920s, after the Quebec Bridge disasters, a professor at the University of Toronto convinced the Engineering Institute of Canada to come up with a code of ethics and a ceremony that all its graduating engineers would be required to undergo. This ceremony became the Ritual of the Calling of an Engineer. The ritual is similar to the much more ancient tradition of the Hippocratic Oath taken by physicians, which states that their obligations to those under their care come before their obligations to anyone else. It seems clear, at least to me, that there is a cultural understanding of the obligations of civil engineers and architects to the general public. Take the case of the Sampoong Department Store collapse in 1995, the most lethal building collapse since the collapse of the Circus Maximus in 140 CE. In this instance, not one but two different construction firms refused to complete work that they believed to be unsafe and resigned -- or were fired -- after they did so. Five years after the building opened to the general public, it collapsed, killing more than 500 people.

All of the major professional engineering societies have codes of ethics that they require their members to obey, and these codes include statements of engineers' obligations to the general public. "Engineers shall hold paramount the safety, health and welfare of the public and shall strive to comply with the principles of sustainable development in the performance of their professional duties," from the American Society of Civil Engineers, is one example, but the language is similar across all of them.

Even the professional societies for software engineers have such statements. From the Association for Computing Machinery: "Software engineers shall act consistently with the public interest... [approving] software only if they have a well-founded belief that it is safe, meets specifications, passes appropriate tests, and does not diminish quality of life, diminish privacy or harm the environment. The ultimate effect of the work should be to the public good." From the Institute of Electrical and Electronics Engineers: "We, the members of the IEEE... accept responsibility in making decisions consistent with the safety, health, and welfare of the public, and to disclose promptly factors that might endanger the public or the environment."

Unlike our brothers and sisters in the other engineering disciplines, though, we software engineers do not have the same history of mandatory formal education. Indeed, many hackers -- myself included -- take pride in the fact that the software community takes in both the self-taught and the formally trained. Perhaps for that reason, we do not have anywhere near the same level of membership in professional societies as the other engineering disciplines.

I would wager good money that almost every single person reading this has written code that was deployed into production before they were happy with how it was written and confident that it had no bugs in it. For that matter, I would wager the same amount that most of us have deployed code to production which we knew had bugs in it, because we were pressed to ship it anyway.

I can see the objections many of you are writing to me right now: "But, it's not the same! Sure, people who write code that goes into medical devices, or powers the space shuttle, they should definitely be careful. But I just write code that powers a video game, or an advertising network, or a new social network for dogs. It can't hurt anyone!"

Let me be clear up front: You're right, in part. Code which has life-safety implications should be held to a much higher standard than code which doesn't. I certainly hope that the code inside an implantable defibrillator has gone through a hell of a lot more code checking and QA than the thrown-together code I've written for online advertising. But making the leap from that to "my code can't harm people" is a bridge too far.

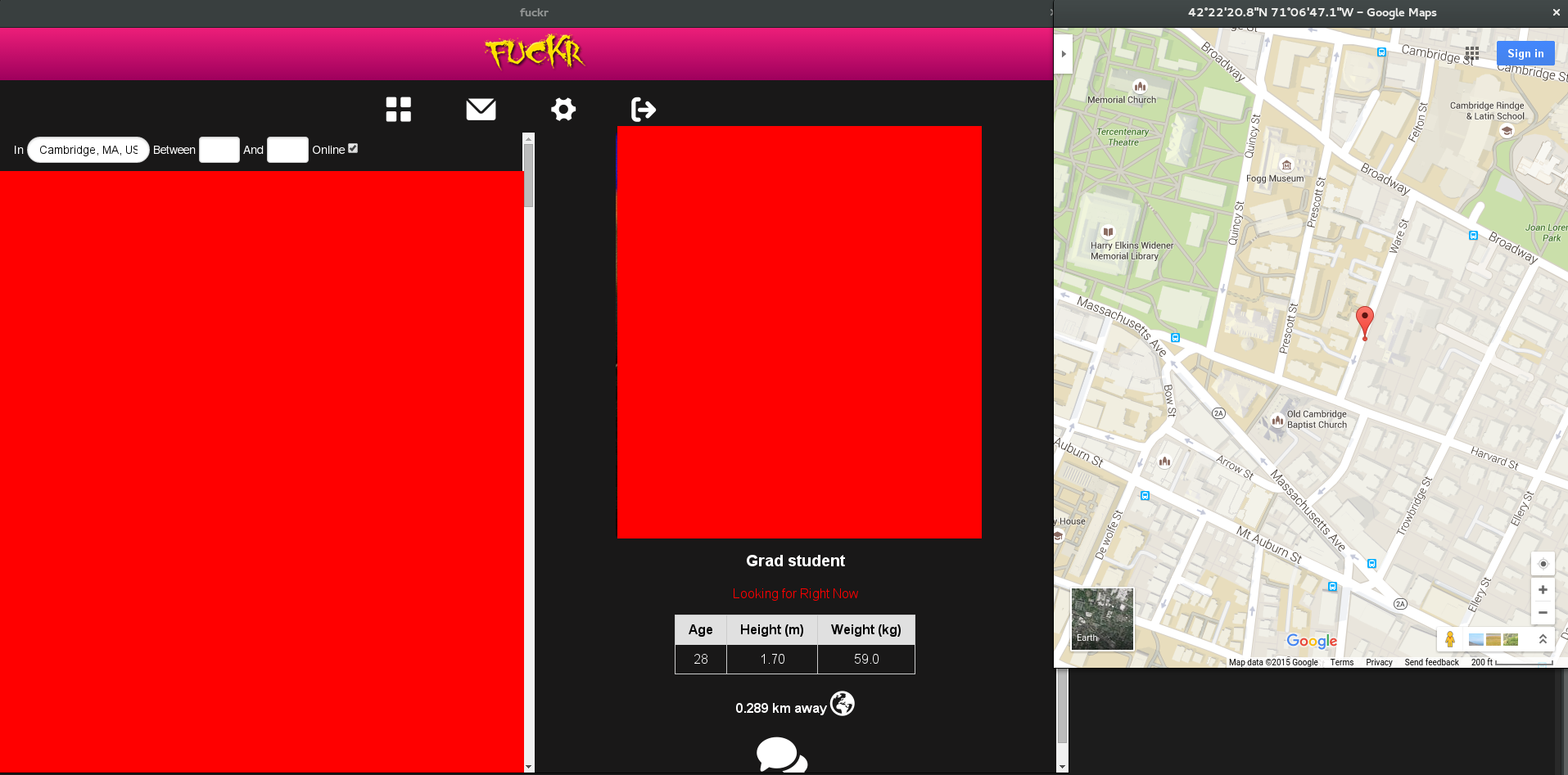

Look at the recent spate of security vulnerabilities that have leaked countless people's personal information. From OPM to Target, T-Mobile to Home Depot, it seems that it's never more than a couple of weeks between reports of some catastrophic information leak these days. But it's not only security vulnerabilities that we have to think about. Let's take a hookup app as an example. On the surface, there's not a whole lot to be concerned about here. People are free to give as much -- or as little -- information about themselves as they want; they can select pictures which show their faces, or not, and the only bit of information revealed about them other than what they choose is how many feet away they are from the person viewing their profile. Not particularly sensitive information.

However, it's quite easy to turn this relatively harmless piece of software into a very dangerous tool against its users. Take Grindr, for example. In 2014, it was publicly revealed that you could query the Grindr API without any authentication and retrieve a list of users near you, along with their distances to you, out to several decimal places of accuracy. In addition, you could query the API -- again, without any authentication -- and give it an arbitrary latitude and longitude as /your/ current location. These two things combined meant it was trivial to triangulate the position of arbitrary users to within a couple of feet [1]. Because Grindr targets the gay community, this quickly became a life-threatening vulnerability. Police in Egypt were recorded using this functionality to track down and arrest -- or extrajudicially punish -- gay people [2]. Grindr disabled the "distance" feature for several months, but it has since been reactivated, although it remains blocked in several countries. It is still possible to triangulate the position of arbitrary Grindr users today.
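To see why a precise distance plus a freely chosen query location adds up to a precise position, here is a minimal sketch of the underlying geometry. It is not the method from [1] and does not talk to any real API: it assumes a flat local plane with coordinates in metres, uses entirely synthetic numbers, and the names `trilaterate` and `reported_distance` are hypothetical stand-ins for illustration.

```python
import math


def trilaterate(observations):
    """Estimate a 2D position from three (x, y, distance) observations.

    Assumes a flat local plane (metres) and exact distances. Subtracting
    the first circle equation from the other two leaves a 2x2 linear
    system in the unknown position, solved here with Cramer's rule.
    """
    (x0, y0, d0), (x1, y1, d1), (x2, y2, d2) = observations

    a11, a12 = 2 * (x1 - x0), 2 * (y1 - y0)
    a21, a22 = 2 * (x2 - x0), 2 * (y2 - y0)
    b1 = d0**2 - d1**2 + x1**2 - x0**2 + y1**2 - y0**2
    b2 = d0**2 - d2**2 + x2**2 - x0**2 + y2**2 - y0**2

    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("observation points are collinear; pick a third point off the line")

    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


def reported_distance(observer, target):
    """Hypothetical stand-in for a service that reports exact distance."""
    return math.hypot(target[0] - observer[0], target[1] - observer[1])


# Synthetic example: the "unknown" position is only used to generate distances.
hidden_target = (120.0, -45.0)
claimed_locations = [(0.0, 0.0), (500.0, 0.0), (0.0, 500.0)]
observations = [
    (x, y, reported_distance((x, y), hidden_target)) for x, y in claimed_locations
]

estimate = trilaterate(observations)
print(estimate)
```

Three distance readings from three claimed locations are enough to pin down a single point, which is why neither piece of information is harmless once the two are combined.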

This is only one example of how a seemingly harmless feature can be extremely dangerous if it is not carefully coded and its ramifications are not thought through. There are countless more out there; I'm sure every one of us can think of some feature that we have written at one point or another that could be misused to the detriment of our users.

Do we, as software engineers, have the same kind of moral responsibility as our fellow engineers in other disciplines? Should we refuse to build systems which can be turned against users, even if it means our jobs? How do we, as a profession and as a community, balance our responsibilities to our employers and to the general public? If we were required to "stand under the arch", as it were, how many of us would have pushed back against things that our employers asked us to do?